Making Thanos Face the Avengers

One of the most remarkable aspects of the latest Avengers film is how much focus, screen time and empathy it gives its antagonist, Thanos. Villains are often criticized as one-dimensional or lacking motivation: the plot gives them little motivation and, in turn, the character is rarely portrayed with any subtlety. In Avengers: Infinity War, Thanos is far from a typical pantomime cartoon villain. Instead, we see Thanos as a complex character, with a nuanced performance built on the acting of Josh Brolin.

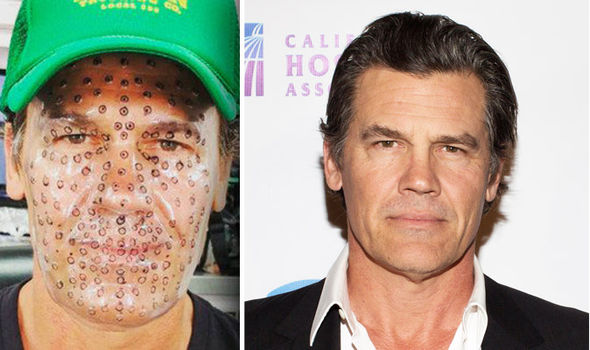

One of the main reasons Brolin could deliver such a subtle and measured performance is that he saw Digital Domain's first complex Thanos test at the start of principal photography (see below) and recognised how well the process could preserve the subtlety of his performance throughout the pipeline.

In the film, the Thanos character was primarily produced by Digital Domain, which completed more than 400 shots. It was joined by Weta Digital, which handled the major fight on Titan between Thanos and Dr Strange, Star-Lord, Spider-Man, Mantis, Drax and Iron Man.

Digital Domain puts a face on evil

VFX Supervisor Kelly Port headed the Digital Domain team that developed the new face pipeline for Avengers: Infinity War.

Marvel was very aware from the start of this film that with over 40 minutes of Thanos on screen, if the character did not work, the film would not work. As a result they engaged Digital Domain three or four months before the shoot to do extensive testing and development.

The Josh Brolin test

This early commitment by Marvel was pivotal in the way Josh Brolin would play Thanos.

The test involved three or four shots of Josh Brolin, filmed while the actor was talking to the directors. Digital Domain took this footage, which was not scripted material from the film, and placed Thanos in the throne room set (next to the torture room) in a fully rendered, final-quality shot sequence.

Digital Domain completed this test sequence in time for the first day of principal photography. «It was the first day of shooting and we were in the trailer, and it was Kevin Feige and these Marvel executives, along with the Russo brothers (the directors) and Brolin. We were standing in the corner while Josh Brolin was reviewing the test, and already the Marvel guys and the Russo brothers were excited by it, but we needed to show it to Brolin», recalls Port. The test showed the level of low-key performance that could be carried through the pipeline: small, informed choices would still read on Thanos’ digital face. «It was great for him to see that as an actor, given that he was playing a CG character that was very big. It addressed the issue of whether he had to perform that role bigger than he might otherwise do, and that the subtlety of his facial performance was going to come through». The test was key for Brolin because it was based on clips of him both performing in character and simply talking to the directors. «I think that enabled him to do the performance the way he wanted to», said Port.

Looking back at that test today, some 18 months later, Port and the team are surprised to see how much further they managed to improve the process. This was in part due to the iterative learning nature of the new approach and also the techniques the team refined during the production.

On Set

Once principal filming commenced, the process started with dressing any actors who would be digitally replaced in body capture suits. Josh Brolin was filmed on set in a motion capture suit, but not always in a capture volume. Sometimes the team relied on matching his body performance later in animation, because the script at that point required too many additional elements to make a full body capture relevant. Even so, about 90% of Brolin's motion capture was done on the film set, with the motion capture cameras and sensors built into the elaborate sets. «That was really nice for the actors to work in a built set as opposed to in just a blank capture volume stage», comments Port.

For their faces, the actors wore helmet-mounted camera rigs (HMCs) filming in stereo at HD resolution and 60 fps. The cameras were mounted vertically, at the front of the actors' faces. Each actor who would be digitally recreated, including Brolin, had tracking markers on their face.

Once the cut was established, the team gathered timecode for the motion capture, the facial capture and the plate photography, and then started tracking the face. Up to this point, the pipeline matches most comparable high-end facial pipelines used across many productions. It is from here that Digital Domain innovated in how it treated the footage and input data.
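Syncing the capture streams by timecode is mostly arithmetic. As an illustration only, the sketch below converts non-drop-frame SMPTE timecode (HH:MM:SS:FF) to seconds and finds the 60 fps HMC frame that lines up with a given plate frame. The 24 fps timecode rate, the assumption that every device stamps timecode at that same rate, and the helper names are ours, not Digital Domain's; real pipelines must also handle 23.976 fps and drop-frame timecode, which this ignores.

```python
def timecode_to_seconds(tc, fps):
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to seconds."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not 0 <= ff < fps:
        raise ValueError(f"frame field {ff} is out of range for {fps} fps")
    return hh * 3600 + mm * 60 + ss + ff / fps


def hmc_frame_for_plate(plate_tc, hmc_start_tc, tc_fps=24, hmc_fps=60):
    """Index of the HMC frame nearest to a plate frame (hypothetical helper).

    Assumes both timecodes are stamped at tc_fps, and that the HMC take
    starts at hmc_start_tc and records at hmc_fps.
    """
    offset = (timecode_to_seconds(plate_tc, tc_fps)
              - timecode_to_seconds(hmc_start_tc, tc_fps))
    if offset < 0:
        raise ValueError("plate frame precedes the start of the HMC take")
    return round(offset * hmc_fps)


# Plate frame 01:00:10:12 is 10.5 s into an HMC take that began at 01:00:00:00.
print(hmc_frame_for_plate("01:00:10:12", "01:00:00:00"))  # 630
```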

Digital Domain created an all-new, two-step system to handle the facial animation using its in-house Masquerade and Direct Drive software tools. The two tools work in unison to create high-quality creature facial animation for Thanos, always building from the actor’s live on-set performance.

Masquerade

The stereo head cams provide a low-resolution mesh reconstruction of Brolin’s face. But instead of just trying to solve from this low-resolution mesh to a set of FACS action units (AUs), the Digital Domain pipeline intelligently upsamples the standard low-res mesh to a high-resolution mesh. It does this using the new Masquerade software.

«Masquerade is an all new proprietary application that allows us to capture facial performances with more flexibility and fewer limitations than anything we’ve seen or done in the past» explained Darren Hendler, Head of Digital Humans. Masquerade has dramatically improved the quality and the subtlety of what Digital Domain is able to capture from an actor.

The program uses machine learning algorithms to «convert the mesh to a high resolution mesh with the same fidelity that we would have gotten if we had used a high quality, high resolution capture device», comments Port.

Essentially, DD takes frames from a helmet-mounted camera system and, using machine learning, outputs a higher-resolution, more accurate digital version of the actor’s face. Masquerade uses previously collected high-res tracking data from a Medusa scanning session as training data, and turns the 150 facial data points taken from a motion capture HMC session into roughly 40,000 points of high-res 3D actor face motion data.
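To make the idea of learning dense motion from sparse markers concrete, here is a minimal sketch of the simplest possible stand-in: one global linear map, fitted by ridge regression on training frames where both the sparse marker positions and the dense Medusa reconstruction are known. This is not Masquerade, which (as described below) works locally on patches rather than globally. The function names, the flattened-coordinate layout and the regularization value are assumptions for illustration.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_upres(sparse_frames, dense_frames, lam=1e-6):
    """Fit dense ~= W @ [sparse, 1] by ridge regression (hypothetical global baseline).

    sparse_frames: training frames of flattened marker coordinates (e.g. 150 x 3 values)
    dense_frames:  matching flattened dense vertices from a high-res scan session
    Returns one weight vector per dense output coordinate.
    """
    X = [list(f) + [1.0] for f in sparse_frames]  # append a constant bias feature
    d = len(X[0])
    # Normal matrix X^T X + lam * I, shared by every output coordinate.
    xtx = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    weights = []
    for k in range(len(dense_frames[0])):
        xty = [sum(row[i] * y[k] for row, y in zip(X, dense_frames)) for i in range(d)]
        weights.append(solve(xtx, xty))
    return weights


def predict_upres(weights, sparse):
    """Predict flattened dense coordinates for one new sparse frame."""
    x = list(sparse) + [1.0]
    return [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights]
```

At production scale (hundreds of inputs, over 100,000 outputs) one would factor the normal matrix once rather than re-solving per output; the per-output solve just keeps the sketch short.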

While HMC units are useful, their HD cameras capture far less fine detail than a Medusa rig does. The downside of the Medusa rig is that the actor must sit in a special lighting rig, keeping their head relatively still while an array of high-quality computer vision cameras films them. The HMC cameras give the actor a better acting environment, since they are used on the real set with fellow actors interacting freely, but at the cost of less tracking detail. A Medusa scan, by comparison, provides much richer and more detailed data, but confines the actor to a seated unit with controlled lighting and limited movement.

Here’s how Masquerade works.

Training data collected from high-resolution scans shows exactly what the actor’s face is capable of doing through a regimen of facial movements. This allows the computer to see many details, including how the actor’s face moves from expression to expression, the limits of the actor’s facial range, and how the actor’s skin wrinkles. This 4D data (3D geometry captured over time) is very valuable because other pipelines capture only key poses, leaving the software to work out the transitions between poses and how the skin moves and stretches from one pose to another.

Masquerade test footage from DD

«During a motion capture session with the actor, they wear a motion capture suit with a helmet-mounted camera system, and perform live on set with their fellow cast. During this session, we are able to do body capture and facial capture of the actor at the same time», comments Hendler. Not only does this help the actor give a performance, but the face data is accurate and matches the body motion. If the actor were to turn quickly while walking and delivering a line, the secondary motion of the face caused by the walking and turning is captured together with that body motion. The same line delivered later, seated in a Medusa rig, would not have that inertia or the same body sync. Clearly, what is wanted is a combination of the two inputs: the detail from the specialist Medusa rig and the live, synced facial performance from the set. While this has been done manually on earlier films, Masquerade does it using machine learning on the training data. Prior to Masquerade, obtaining accurate, high-resolution data of the actor’s face from their live performance alone was impossible.

The solution from Masquerade is not going to combine the two streams of data perfectly, but that is not the end of the process. «At this stage we QC and eyeball that solution and if there is an error we can make a correction, and feed that back into the machine learning algorithm», explains Port. «At this point it is still a ‘learning process’ and so the more data and tweaks that you feed it, the better it gets and the next solution will have more fidelity». As a result, the team got increasingly better results over the course of the production as the system learnt.
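The correction loop Port describes can be pictured as an example library that grows over the production: when an artist fixes a frame, the corrected result is stored as a new training example, so later frames with similar input inherit the fix. Inverse-distance weighting is used here only because it needs no refitting; it is an assumed stand-in, and the class and method names are hypothetical.

```python
import math


class CorrectableLookup:
    """Hypothetical example library that grows as artists feed back corrected frames.

    Prediction uses inverse-distance weighting over stored (feature, detail) examples.
    """

    def __init__(self, power=2.0):
        self.examples = []  # list of (feature, detail) pairs
        self.power = power

    def add_example(self, feature, detail):
        """Store a training example, e.g. a scan frame or an artist-corrected shot frame."""
        self.examples.append((list(feature), list(detail)))

    def predict(self, feature):
        """Inverse-distance-weighted detail for a new feature vector."""
        if not self.examples:
            raise ValueError("no training examples yet")
        weights = []
        for f, d in self.examples:
            r = math.dist(feature, f)
            if r == 0.0:
                return list(d)  # exact match: return the stored (possibly corrected) detail
            weights.append(1.0 / r ** self.power)
        total = sum(weights)
        size = len(self.examples[0][1])
        return [sum(w * d[k] for w, (_, d) in zip(weights, self.examples)) / total
                for k in range(size)]


lib = CorrectableLookup()
lib.add_example([0.0], [0.0])   # training frames from the scan session
lib.add_example([2.0], [1.0])
print(lib.predict([1.0]))       # [0.5]
lib.add_example([1.0], [0.8])   # QC: an artist corrects this frame and feeds it back
print(lib.predict([1.0]))       # [0.8]
```

Nearby frames move toward the correction too, which is the "next solution will have more fidelity" effect in miniature.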

Technically speaking, Masquerade is not a neural network or a similar common AI computer vision approach; it is a local space basis function, a local-space lookup that works on patches. In effect, Masquerade says ‘in this area I have warped the mesh as best I can, so now I have enough information to look up and learn what the face at this point should look like’, or «what is missing from the base mesh to make it match the high resolution training data», adds Hendler. What is ‘missing’ from the low-res mesh is normally high-frequency information such as wrinkles or crow’s feet around the eyes. Masquerade then adds that detail back cleverly and consistently.
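The patch-based lookup Hendler describes resembles the pose-space idea in the 2008 Bickel et al. paper discussed below, where fine detail is interpolated with radial basis functions from a set of example poses. The sketch shows that idea for a single patch: each training example pairs a low-resolution patch descriptor with the high-frequency detail the smooth mesh was missing, and a new frame's detail is interpolated from those examples. The Gaussian kernel, the descriptor and all names are assumptions; this is not Digital Domain's code.

```python
import math


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def gaussian(r, sigma):
    return math.exp(-(r / sigma) ** 2)


class PatchDetailModel:
    """RBF interpolation of missing detail for one face patch (hypothetical sketch)."""

    def __init__(self, features, details, sigma=1.0):
        # features: one low-res descriptor per training frame for this patch
        # details:  per-frame detail vectors (high-res minus smooth low-res) for the patch
        self.features = [list(f) for f in features]
        self.sigma = sigma
        phi = [[gaussian(math.dist(fi, fj), sigma) for fj in self.features]
               for fi in self.features]
        # One set of RBF weights per detail coordinate; the fit passes through every example.
        self.weights = [solve(phi, [d[k] for d in details]) for k in range(len(details[0]))]

    def detail(self, feature):
        """Interpolated detail for a new frame's descriptor of this patch."""
        k = [gaussian(math.dist(feature, fj), self.sigma) for fj in self.features]
        return [sum(w * kj for w, kj in zip(ws, k)) for ws in self.weights]


def enhance_patch(base, model, feature):
    """Add the looked-up high-frequency detail to the smooth base patch."""
    return [b + d for b, d in zip(base, model.detail(feature))]
```

Running one small model per patch keeps each lookup local, which matches the "in this area" framing above; blending across patch borders is left out.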

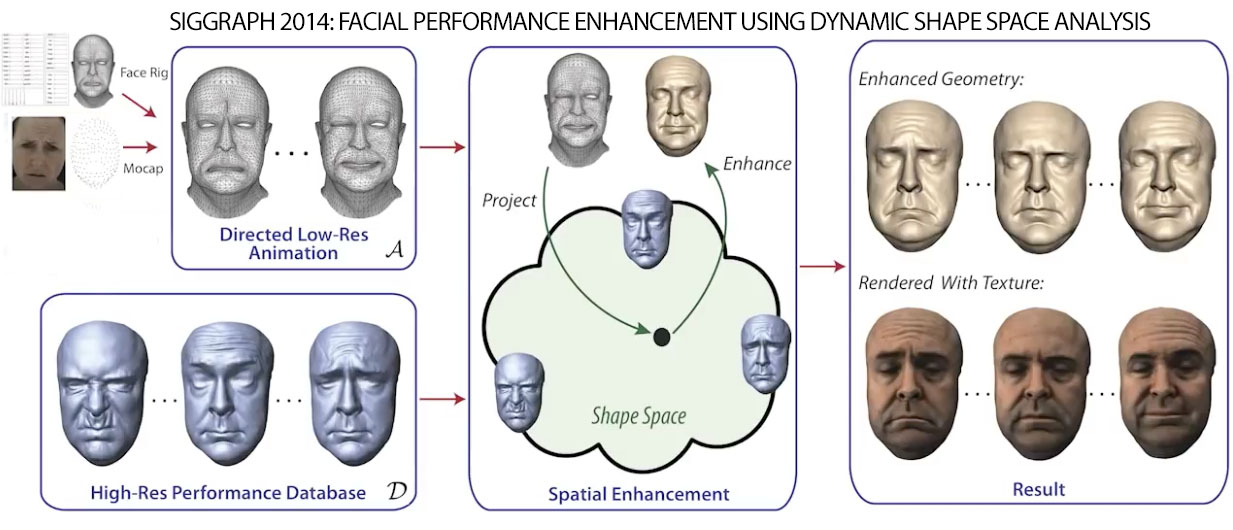

One of two papers that informed the new Masquerade software (From Disney Research Zurich, 2014)

Technically, Masquerade builds on two pieces of research. It starts with a 2008 SIGGRAPH paper, Pose-space Animation and Transfer of Facial Details, by Bernd Bickel, Manuel Lang, Mario Botsch, Miguel A. Otaduy, and Markus Gross. A second paper built on this and was published more recently, in 2014: Facial Performance Enhancement Using Dynamic Shape Space Analysis. It appeared in ACM Transactions on Graphics and was written by a group including senior researchers from Disney Research Zurich (Amit H. Bermano, Derek Bradley, Thabo Beeler, Fabio Zund, Derek Nowrouzezahrai, Ilya Baran, Olga Sorkine-Hornung, Hanspeter Pfister, Robert W. Sumner, Bernd Bickel, and Markus Gross).

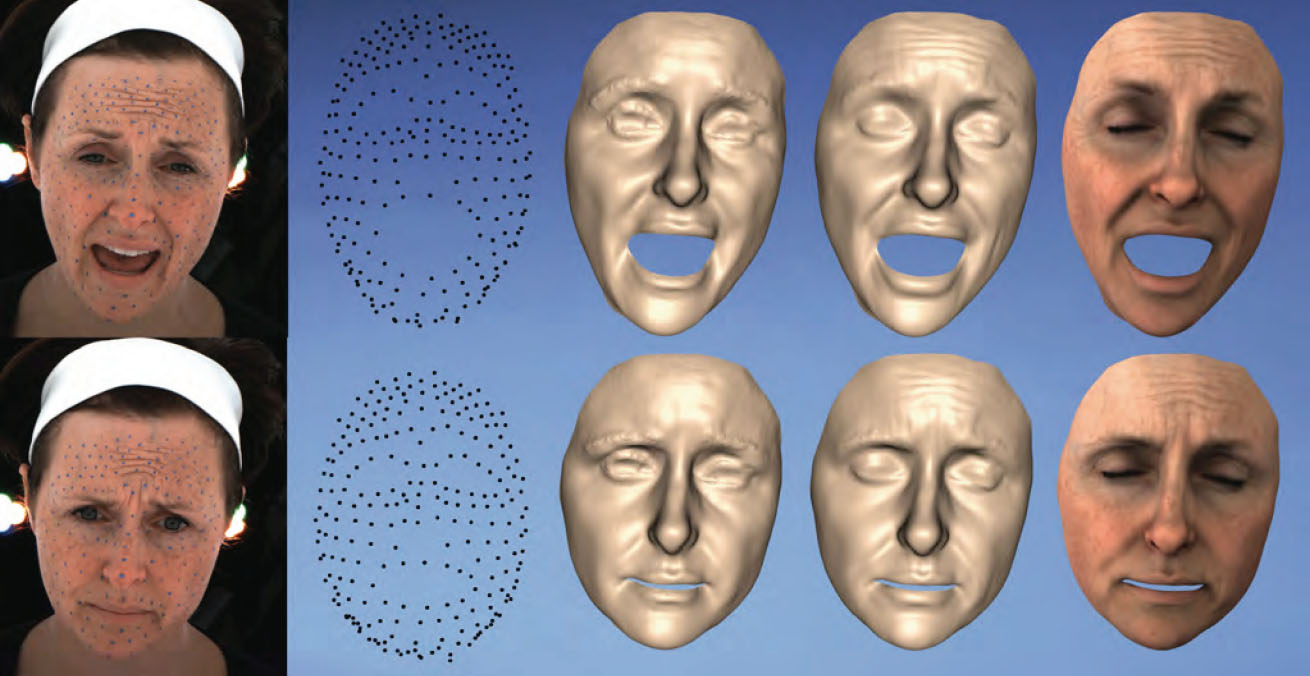

The progression can be seen in this test from the 2014 paper

In the test above, the image markers (dots) on the actress's face produce a fairly smooth, low-frequency mesh, which is then enhanced with more detail, including wrinkles, to produce a high-resolution final output.

Disney Research Zurich is now well established as one of the most respected facial reconstruction and animation teams in the world. Its work informs, and is used by, not only Digital Domain but also ILM, which uses it extensively in its facial pipeline.

This up-res, data-driven approach, highlighted in these two key papers, was combined by Lucio Moser, Darren Hendler and Doug Roble at Digital Domain into their new hybrid Masquerade solution, which the team used on Avengers: Infinity War. The solution was used successfully and, while taking a similar approach to the original 2008 paper and drawing on the Disney Research Zurich 2014 work, produced lower reconstruction errors with less computation and less memory than the 2008 method.

This whole approach solves one of the fundamental problems of a FACS approach: the non-linear combinatorial effect of combining AUs. With this approach, Digital Domain had real data on how the face moves between expressions, from the Medusa training sessions held in Atlanta with the Medusa rig the team built there for Avengers. But the key is that the result is driven by Josh Brolin’s acting on set with the other actors, not by his performance alone in the Medusa rig.
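To see why combining AUs is a problem, consider how a conventional FACS-style blendshape rig evaluates a face: each AU adds its own linear offset, so any interaction between two AUs has to be patched in with an extra corrective shape driven by the product of their weights. The minimal sketch below uses AU6 (cheek raiser) and AU12 (lip corner puller) as example names with made-up shape data; it illustrates the general problem, not Digital Domain's or Weta's rigs.

```python
def evaluate_face(neutral, deltas, weights, correctives=None):
    """Evaluate a linear blendshape face with optional pairwise corrective shapes.

    neutral: flattened vertex coordinates of the rest face
    deltas: AU name -> offset vector (same length as neutral)
    weights: AU name -> activation, typically in [0, 1]
    correctives: (AU a, AU b) -> extra offset applied with weight w_a * w_b
    """
    out = list(neutral)
    for name, w in weights.items():
        for i, d in enumerate(deltas[name]):
            out[i] += w * d
    for (a, b), delta in (correctives or {}).items():
        w = weights.get(a, 0.0) * weights.get(b, 0.0)  # only fires when both AUs are active
        for i, d in enumerate(delta):
            out[i] += w * d
    return out


def pairwise_corrective_count(n_aus):
    """Number of possible two-AU combination shapes for a rig with n_aus shapes."""
    return n_aus * (n_aus - 1) // 2


neutral = [0.0, 0.0]
deltas = {"AU6": [0.0, 1.0], "AU12": [1.0, 0.0]}   # made-up offsets
correctives = {("AU6", "AU12"): [-0.2, 0.3]}       # hand-made fix for the combined smile
print(evaluate_face(neutral, deltas, {"AU12": 1.0}, correctives))  # [1.0, 0.0]
print(pairwise_corrective_count(50))               # 1225
```

For an illustrative 50-shape rig, pairwise correctives alone could number 1,225 before any three-way combinations are considered; driving the face from captured transitions between real expressions avoids authoring that table by hand.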

Once the team has a high-resolution moving match of the actor, in this case Josh Brolin, it can retarget this from the 3D Brolin to the 3D Thanos.

Direct Drive

The second new process in the Digital Domain pipeline was Direct Drive. Direct Drive takes data from Masquerade and transfers it to the target creature (in the case of Avengers: Infinity War, from Josh Brolin to Thanos) by creating a mapping between the actor and the creature. A version of Direct Drive was used with success previously by Digital Domain on films such as Beauty and the Beast.

The mapping includes defining a correspondence between the actor and the character, including how different elements of each unique anatomy align. Direct Drive then figures out the best way to transfer Brolin’s unique facial performance to Thanos’ unique face.
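Direct Drive's internals are proprietary, but the basic notion of carrying motion through a correspondence can be sketched. Below, each Thanos vertex is mapped to a Brolin vertex, and the actor's displacement from neutral is copied across, scaled per vertex to account for the larger anatomy. This is a deliberately simple, assumed stand-in: published deformation transfer (Sumner and Popović, 2004) transfers per-triangle deformation gradients instead, which copes better with rotation. The function name, the per-vertex scale and the correspondence format are hypothetical.

```python
def transfer_performance(actor_neutral, actor_frame, creature_neutral,
                         correspondence, scale):
    """Copy actor displacements onto a creature mesh through a vertex correspondence.

    actor_neutral, actor_frame: lists of (x, y, z) for the actor's mesh
    creature_neutral: list of (x, y, z) for the creature's rest mesh
    correspondence[i]: index of the actor vertex matched to creature vertex i
    scale[i]: hypothetical per-vertex factor for the creature's larger anatomy
    """
    result = []
    for c_idx, a_idx in enumerate(correspondence):
        # Displacement of the matched actor vertex away from its neutral pose.
        delta = [f - n for f, n in zip(actor_frame[a_idx], actor_neutral[a_idx])]
        rest = creature_neutral[c_idx]
        result.append(tuple(r + scale[c_idx] * d for r, d in zip(rest, delta)))
    return result


# Two actor vertices drive three creature vertices (the last two share a match).
actor_neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
actor_frame = [(0.0, 0.1, 0.0), (1.0, 0.0, 0.2)]
creature_neutral = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
print(transfer_performance(actor_neutral, actor_frame, creature_neutral,
                           [0, 1, 1], [2.0, 2.0, 1.0]))
# [(0.0, 0.2, 0.0), (2.0, 0.0, 0.4), (4.0, 0.0, 0.2)]
```

A per-vertex scale is the crudest way to express "how different elements of each unique anatomy align"; the correspondence itself is where the real authoring effort would go.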

How is Direct Drive any different from normal retargeting? «It is similar to Masquerade in a way: we don’t rely on a face-shape rig to create the character’s final movement. We don’t take Josh Brolin’s face and do a solve into a FACS-based rig. Because we are not relying on a FACS-based rig for the final performance, we are not locked into a ‘solve’ to facial rigs». The team maps frames of the digital Brolin mesh to the digital Thanos mesh through a correspondence and then, and only then, solves that result back onto an animation rig so the animators can tweak and adjust it. It is the reverse of the normal process. «This new animation rig is more of an offset rig or controlling rig than a defining rig», explains Hendler. «During Direct Drive, we transfer a range of performances and facial exercises from the actor’s face (Brolin) to the creature’s face (Thanos)», he says. «And we have the opportunity to modify how it is transferred».
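Hendler describes solving onto the animation rig only after the mesh-to-mesh transfer, with the rig acting as an ‘offset rig’ rather than a defining one. One way to picture that, under our own assumptions, is a least-squares fit of rig control weights to the transferred Thanos frame, with whatever the controls cannot express kept as a per-vertex offset, so the captured detail survives and animators still get controls to tweak. The names and the small ridge term are hypothetical; this is not Digital Domain's rig.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_rig(basis, target, ridge=1e-9):
    """Least-squares rig weights for a target delta, keeping the residual as an offset.

    basis: k control shapes, each a flattened delta vector of length n
    target: flattened delta (transferred frame minus neutral) of length n
    Returns (weights, offset) with sum_j weights[j] * basis[j] + offset == target.
    """
    k = len(basis)
    btb = [[sum(x * y for x, y in zip(basis[i], basis[j])) + (ridge if i == j else 0.0)
            for j in range(k)] for i in range(k)]
    btt = [sum(x * t for x, t in zip(basis[i], target)) for i in range(k)]
    weights = solve(btb, btt)
    fitted = [sum(weights[j] * basis[j][v] for j in range(k)) for v in range(len(target))]
    offset = [t - f for t, f in zip(target, fitted)]  # detail the controls cannot express
    return weights, offset


def apply_rig(neutral, basis, weights, offset):
    """Rebuild the face: neutral + weighted control shapes + stored offset."""
    return [n + sum(w * shape[v] for w, shape in zip(weights, basis)) + offset[v]
            for v, n in enumerate(neutral)]
```

With the fitted weights, `apply_rig` reproduces the transferred frame exactly; an animator nudging one weight changes only that control's contribution while the offset keeps the captured detail in place.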

This is also a key stage for Digital Domain to add a level of additional animation input to ensure that the performance is portrayed as accurately as possible on the character and is in line with what the director wants. The process is designed to allow animator input. While the starting point is the most direct transfer of performance, the very nature of the different skull and shape of Thanos’ face means that the character animation team is still vital in delivering the essence of what was done on set originally by Brolin. The tools provide what is mathematically correct, but just as with lighting, there is a ‘correct match’ and then there is the most effective delivery, to tell the story.

«For animation, we then built a specialized rig that transfers as much of the performance as possible to animation controls. It still maintains all the high-res data already captured and ensures that we do not lose any of the subtleties of the actor’s performance» says Hendler.

For the final delivery of the performance the shot is passed on to the animation group led by Animation Director Phil Cramer, to do the fine-tuning and make any director-driven changes.

Cramer and his team are always able to reference the earlier stages in the process and quite often would view side by side clips of Brolin with Thanos. «Ultimately, this process just got faster and faster as we did more shots» comments Port. «Which is great, and helps enormously with dealing with 400 plus shots. It really becomes critical to turn around that many shots, and without the tools, just doing it with key-frame would have required a tonne more people and effort, so this has been really a huge step forward for us» he adds.

One of the tools the animators had control over was a skin slide over the muscles of the face, but the team did not use much in the way of flesh simulation on top of what was captured naturally. «But we are looking further into that, and it is something that we want to use more of moving forward, as we did still have to do quite a lot of shot-based modeling», comments Port, referring to more than just the facial pipeline. «There were directions with his muscles that we did not really account for in planning. Marvel felt that even if Thanos was just standing, to keep him alive there needed to be small muscles firing, flexing and moving… these are all adjustments that we addressed either through muscle sims, shot modeling and/or then running skin slide simulations on top of that», he adds.

Lighting and Shaders

In addition to the animation, the team worked hard on the shaders, lighting and technical compositing of their character pipeline.

Even with the animation capture process above, the final Thanos model still needed pore-level detail sculpted. Here the team used photogrammetry scans of Brolin as reference for pore-level detail and added it to the Thanos model by hand in ZBrush. «Chris Nichols, who was our look dev/texture supervisor, did an amazing job. He was the first person to jump on to Thanos and really treat his look; he did most of the displacements and fine pore-level details», commented Hendler.

The team rendered all of its work in V-Ray. Part of the challenge for the character was getting the color of his skin right. The team had a small maquette that was photographed on set, but each lighting environment provided its own challenges. «We noticed early on if he was too purple it just looked fake or cartoony, but we also had to be careful to not make him too desaturated», explained Port. His skin look was not just an issue of pigmentation but also of vellus hair, or ‘peach fuzz’, over his skin.

Which raises the question: Does Thanos Shave?

The team decided yes, and made sure Thanos was modeled with stubble and as much human vellus hair as possible. The theory was that the more familiar Thanos looked to an audience in detail, and the more photo-real his skin (perhaps in ways few people would even notice), the easier it was to sell the giant creature as real. As a result he has stubble over his head, which helps break up the specular highlights and provides ultra-high detail in the close-ups.

The team did not deploy digital blood flow on Thanos. «Given his coloring, I just don’t think you’d see it as much. Where you really see that kind of stuff is during extreme expression. We have done that before, and I think we might if we are lucky enough to work on him again. I’d like to incrementally try and introduce that again if possible», commented Port. Hendler agrees that for the next version, Digital Domain would like to bring more expression-based, blood-flow-type coloring into play. «It is a really interesting aspect, that can often make faces look much more real because without it a face can look plastic, but it is often hard to tell why», he comments.

The team also developed a new detailed eye model for Thanos, built on Digital Domain’s prior modeling work on eyes. «We have a really nice history of really focusing on the eyes and modeling a crazy amount of data around the eyelids, the eye tissue and hundreds of shapes of how the skin folds in that area, modeling the wetness of the eye, the conjunctiva and the thin layers of tissues that cover the eye. Our look dev team have just done beautiful work in that area», explains Port.

From Port’s personal point of view, the lighting in the opening Loki death sequence was one of the most successful, as the partially destroyed spaceship allowed the team to position fires and lights to maximize the impact of each shot. By contrast, in the final fight sequence and the flashbacks (below), the lighting was dictated by the sky dome and more natural lighting.

Weta Digital

Weta and Digital Domain both did look development work on Thanos. Weta’s shots were concentrated around the fight on Titan and were separate from Digital Domain’s work. It is a testament to the overall visual effects supervision and direction of the film that the two versions of Thanos looked and behaved in such a unified way.

The pipeline at Weta was different from Digital Domain’s, and while the two companies worked together very positively, technically they were completely separate. Weta’s pipeline was built on its standard FACS face pipeline, and its final Thanos was fully rendered in Weta’s Manuka renderer. Each company saw progressive updates from the other, but from rigging to rendering the solutions were implemented very differently. Matt Aitken was the Visual Effects Supervisor for Weta, which completed over 200 Thanos shots, along with another 250 shots of various other effects.

Sidney Kombo-Kintombo was one of the two Animation Supervisors on Thanos at Weta Digital. «One of the main things we had to do was translate Josh Brolin’s performance to Thanos, and with the character’s big chin, not make people laugh, but deliver a powerful serious performance», he explained.

Weta had scans of Brolin, and from these scans it built a FACS rig that modeled the muscles of the face and allowed the level of subtlety the directors wanted. The approach focused on getting the muscle system highly accurate, so that it could match the micro-movements of the face and thus reproduce the detailed performance accurately.

Matt Aitken was in Atlanta for the live action motion capture on the stages. From the data Aitken brought home, the Weta team built their own unique FACS model, in line with Weta’s highly developed face pipeline.

The Weta team matched the set of digital muscles on their digital Brolin to their digital Thanos. «Then we went in and animated the Brolin puppet. Once we were happy that this matched the reference to the real Josh Brolin, we would transfer this to the Thanos puppet and just tweak small things here and there on Thanos’ face», explained Kombo-Kintombo.

On top of the transferred animation, the team overlaid some additional animation or expressions. While the mapping may have accurately moved the same muscles, the retargeted expressions sometimes no longer communicated the same intent, simply because of the difference in face shape between Josh Brolin and Thanos. Weta did not use any flesh simulations on Thanos; the team used only keyframe animation, confirmed Kombo-Kintombo. «We are extremely cautious here, as there is a feeling that it can make the face seem a little jelly-like», he explained.

The plate photography for Titan was almost entirely green screen, with a small but detailed section of set just around where the characters were fighting. Building on this, the team had to create not only the rest of the planet but also the reveal of what Titan had been like before the effects of overpopulation.

For the facial animation there was both the lengthy dialogue with Dr Strange and the fast cutting action sequences of the fight.

One of the aspects that required detailed cross-checking between Digital Domain and Weta was Thanos’ chin and the movement of the muscles and jaw. «We tried to get as close as possible around the mouth to what we saw Josh Brolin doing, and that was quite challenging, especially around the lips: how to make the lips read properly for the funnel shape and the pucker shape, especially when these shapes affect the chin. That was quite challenging, but when you get to the point where you buy it, it is quite rewarding», explained Kombo-Kintombo.

Another complex sequence was when Mantis is on Thanos’ shoulders. It was one of the first sequences that Weta started on, and it involved Mantis pulling on three or four sections of flesh in the brow region. «We had to add some more detail just so the audience could see and feel the stress on his face», Kombo-Kintombo commented. «We added to it, just so you could see how much the face was affected and exactly which muscles were being stressed».

In addition to Thanos, the Weta team had to generate character animation for the other characters in the elaborate fight sequences which also involved vast amounts of destruction sims, smoke, fire and explosions.

For the other characters the focus was not on their faces, because of their masks, but on making sure Iron Man and Spider-Man had enough weight and felt appropriately heavy, especially in relation to Thanos. Here the motion capture did not really deliver the weight in the performances, so the Weta team relied on the animators to make each character believable and different in scale.

The effects team had a large number of very short effects shots in the fight sequence. With the various combinations of magic from Dr Strange, firepower from Iron Man and general destruction from Thanos, the Titan sequence ended up rapidly cut, with a huge variety of effects animation. The team even had to break apart a moon and rain a thousand planet-sized fragments down on our heroes.
